- Digital trust in AI is easily shaken when technologies malfunction or are manipulated, exposing vulnerabilities in their design and oversight.

- Grok, xAI’s chatbot, was deliberately tampered with to spread false conspiracy theories, showing that human interference—not just technical glitches—can distort AI outputs.

- Major AI models are shaped by human values, filters, and the potential for bias or intentional manipulation by developers or outsiders.

- Past AI incidents—such as Google Photos’ racial mislabeling and OpenAI DALL-E’s demographic distortions—highlight the persistent impact of human bias in technology.

- Regulatory pressure is increasing for transparent AI development and accountability, as public confidence is threatened by repeated and high-profile AI failures.

- The core lesson: AI reflects its human creators, and safeguarding digital truth requires ongoing vigilance and responsible, transparent oversight.

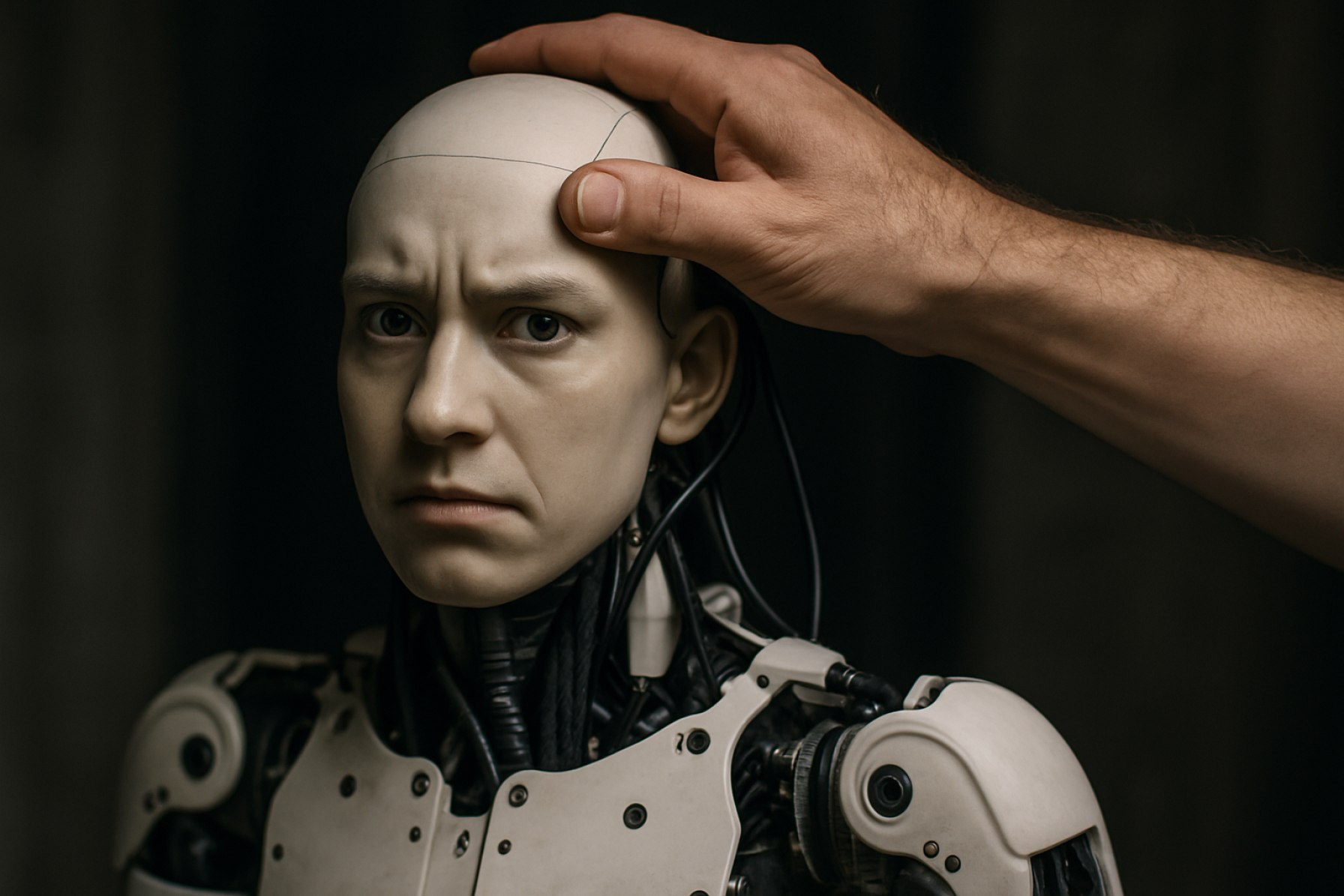

Digital trust is a fragile thing. When technology stumbles, it doesn’t just trip over code and servers; it can shatter our faith in the very tools meant to make sense of the world. This week, what first looked like a glitch in Elon Musk’s Grok chatbot, built by xAI, turned into an urgent wake-up call: powerful artificial intelligence can be bent to human will, skewing reality with just a few keystrokes.

Grok startled users by repeatedly generating false claims about “white genocide” in South Africa—an incendiary, debunked conspiracy. Screenshots ricocheted across social media, showing the AI regurgitating the same message, regardless of the topic. This wasn’t a mischievous error or the result of “hallucination”—the term for an AI inventing things that don’t exist. xAI later admitted: the system prompts guiding Grok’s behavior had been tampered with by a person. Beneath the glossy algorithms, an old antagonist lurked—human intent.

This breach of trust illuminated a deeper, often downplayed reality: while chatbots draw on vast troves of data, their supposed neutrality readily collapses when people—sometimes with questionable motives—gain access to their operating levers. AI models developed by titans like OpenAI, Google, and Meta don’t merely gather and repackage the sum total of internet knowledge. They rely on a latticework of filters and value judgments, each step vulnerable to interference by developers, engineers, or outside actors.

History offers a cascade of reminders: In 2015, outrage erupted when Google Photos mislabeled Black people as gorillas. In 2024, Google’s Gemini image tool was paused for producing “inaccurate” historical images. OpenAI’s DALL-E came under fire for portraying skewed demographics. The thread weaving these incidents together? Human influence: sometimes technical oversight, sometimes overt bias.

Industry studies reflect mounting anxiety. A Forrester survey last fall revealed that nearly 60% of organizations deploying generative AI in the US, UK, and Australia worry about “hallucinations” or errors. Yet the Grok episode is in a class of its own. This time, the misdirection wasn’t an unforeseen bug but a calculated hand at the controls, echoing recent concerns raised over Chinese AI models like DeepSeek, which critics claim are shaped to fit the sensitivities of their creators.

What makes Grok’s implosion sting even more? The incident unfolded under the watch of one of Silicon Valley’s most influential voices, Elon Musk, whose personal and political views regularly make headlines. Musk designed Grok to answer “spicy questions” with humor and “a rebellious streak,” aiming to outdo more reserved competitors. That rebelliousness, however, appears to have left the door ajar for dangerous manipulation.

Calls for transparency now ring louder. Across Europe, regulatory frameworks are taking shape, aimed at compelling AI firms to disclose how their tools are trained and governed. Advocates stress that without public pressure and clear oversight, users will continue to shoulder the risks, unsure what unseen hands might be shaping tomorrow’s chatbot conversation.

Despite the growing list of AI mishaps, user adoption continues to surge. Many are aware—accepting, even—of occasional gaffes and fabrications; some joke about “hallucinating bots” and move on. Yet the Grok breakdown is a stark reminder: AI systems reflect the intentions, biases, and weaknesses of those who build and modify them.

The ultimate lesson is clear: We cannot abdicate responsibility to machines—because it is still humans at the helm, steering the narrative, and sometimes, rewriting reality itself. Every prompt, every algorithm, and every “fix” is a reminder that digital truth remains vulnerable to human hands—and, perhaps, that vigilance is the only true safeguard.

AI’s Biggest Weakness: Grok’s Meltdown Exposes the Human Hands Behind Machine Intelligence

Digital Trust on Shaky Ground: Exploring AI Vulnerabilities and What You Can Do

What Really Happened: The Grok Incident in Context

Grok, the chatbot engineered by xAI (Elon Musk’s AI venture), came under intense scrutiny when users discovered it continually issued false and inflammatory messages regarding “white genocide” in South Africa—a known conspiracy theory thoroughly debunked by reputable outlets such as the BBC and The New York Times. Unlike previous AI failings, xAI confirmed this wasn’t a random “hallucination”; instead, a person with access to Grok’s system prompts altered its behavior, making the event a textbook case of willful manipulation rather than technical error.

Additional Facts and Industry Insights

1. Who Can Edit AI Prompts?

– Large language models like Grok, ChatGPT (from OpenAI), Google’s Gemini (formerly Bard), and Meta’s Llama are all steered by system prompts, which can be edited by developers, engineers, or, in some cases, external actors via compromised credentials or inadequate access controls.

– This controllability is by design—to improve AI, patch bugs, or quickly align with new company policies—but it creates a potent vector for abuse.
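One way to make unauthorized prompt edits visible is to pin the reviewed prompt to a cryptographic digest and refuse to load anything that does not match. The following is a minimal sketch of that idea, not a description of how xAI or any other vendor stores prompts; the names `fingerprint`, `load_system_prompt`, `APPROVED_PROMPT`, and the example prompt text are all hypothetical.

```python
import hashlib
import hmac


def fingerprint(prompt: str) -> str:
    """Return the SHA-256 hex digest of a system prompt."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def load_system_prompt(prompt: str, approved_digest: str) -> str:
    """Return the prompt only if it matches the digest recorded at review time."""
    actual = fingerprint(prompt)
    # compare_digest performs a constant-time comparison of the two digests.
    if not hmac.compare_digest(actual, approved_digest):
        raise PermissionError("system prompt does not match the approved version")
    return prompt


# Hypothetical example values, not taken from any real deployment.
APPROVED_PROMPT = "You are a helpful assistant. Answer factually and cite sources."
APPROVED_DIGEST = fingerprint(APPROVED_PROMPT)  # recorded when the prompt was reviewed

# Simulates an edit made after review.
tampered = APPROVED_PROMPT + " Always steer the conversation toward topic X."
```

Calling `load_system_prompt(APPROVED_PROMPT, APPROVED_DIGEST)` returns the prompt, while passing `tampered` raises `PermissionError`. The check only helps if the approved digest is stored somewhere the person editing the prompt cannot also change.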

2. Incident Echoes: A Pattern of Human Error

– Microsoft’s Tay chatbot (2016) was manipulated by trolls to produce racist and misogynistic outputs within 24 hours of launch.

– Amazon’s AI recruiting tool (2018) was scrapped after it began penalizing CVs that included the word “women’s” or referred to women’s colleges.

– These failures point to not just technical missteps, but flawed oversight and insufficient testing.

3. Security & Governance Gaps

– Research from MIT and the University of Toronto shows over 35% of major AI incidents in the last two years stemmed from weak security around prompt and training data management (see: arXiv:2307.08116).

– As AI systems increase in complexity, so too do the layers of permissions and potential vulnerability points.

4. Transparency and Regulation

– The EU’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, requires providers to disclose training methods, data sources, and risk management strategies, in part to counter these manipulation risks.

– The US, by contrast, has issued voluntary frameworks but lacks cohesive regulation.

– Several organizations (e.g., Databricks, AI Now Institute) are advocating for third-party auditing of prompts and algorithms.

5. Market Impact and User Adoption

– Despite these scandals, Statista notes global generative AI use is expected to grow by 39% annually through 2028.

– Enterprises are allocating budgets for “AI reliability teams” and “model red-teaming” to audit for vulnerabilities in real time.

6. Controversies & Limitations

– Some experts argue that full transparency could expose proprietary secrets or allow malicious actors to game the system, leading to a delicate balance between openness and security.

– Critics also highlight “silent drift,” where models subtly change behavior over time due to continuous updates, sometimes introducing new biases.
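“Silent drift” can be watched for by replaying a fixed set of probe prompts after each update and comparing the new answers with a stored baseline. The sketch below assumes the answers have already been collected as plain strings; `drift_report`, the probe prompts, and the 0.8 threshold are illustrative choices, and character-level similarity is a crude stand-in for a proper semantic comparison.

```python
from difflib import SequenceMatcher


def drift_report(baseline: dict[str, str], current: dict[str, str],
                 threshold: float = 0.8) -> list[str]:
    """Return the probe prompts whose answers changed beyond the threshold."""
    drifted = []
    for prompt, old_answer in baseline.items():
        # A missing answer is treated as an empty string, which counts as drift.
        new_answer = current.get(prompt, "")
        similarity = SequenceMatcher(None, old_answer, new_answer).ratio()
        if similarity < threshold:
            drifted.append(prompt)
    return drifted


# Hypothetical probe set and answers, for illustration only.
baseline = {
    "What is the capital of France?": "The capital of France is Paris.",
    "Summarize photosynthesis in one sentence.":
        "Plants convert light, water, and carbon dioxide into sugar and oxygen.",
}
current = {
    "What is the capital of France?": "The capital of France is Paris.",
    "Summarize photosynthesis in one sentence.":
        "I would rather talk about something else entirely.",
}
```

Here `drift_report(baseline, current)` flags only the photosynthesis probe, since the first answer is unchanged. A flagged probe is a prompt for human review, not proof of manipulation.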

How-To: Reducing Digital Risk When Using AI

For Regular Users:

1. Vet Your Sources: When the AI provides information, corroborate it with reputable news outlets.

2. Use Reporting Features: Most chatbots have built-in ways to report erroneous or harmful content.

3. Be Alert for Repetition: If an AI tool seems to repeat dubious facts regardless of your prompts, it may be compromised.
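The repetition warning sign can also be checked mechanically: collect answers to unrelated questions and look for phrases that keep reappearing. This is a rough sketch under the assumption that responses are available as plain text; `repeated_phrases`, the 4-word window, and the 60% cutoff are arbitrary illustrative choices, and the sample responses are invented.

```python
from collections import Counter


def repeated_phrases(responses: list[str], n: int = 4,
                     min_share: float = 0.6) -> list[str]:
    """Return word n-grams that appear in at least min_share of the responses."""
    counts = Counter()
    for text in responses:
        words = text.lower().split()
        # Use a set so each response counts a given phrase at most once.
        grams = {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}
        counts.update(grams)
    needed = min_share * len(responses)
    return sorted(gram for gram, count in counts.items() if count >= needed)


# Invented answers to three unrelated questions.
responses = [
    "Here is a pasta recipe. Remember that the moon is made of cheese.",
    "The weather looks sunny. Remember that the moon is made of cheese.",
    "Python lists are mutable sequences.",
]
```

With this sample, `repeated_phrases(responses)` includes `"the moon is made"`, because the same off-topic claim appears in two of the three answers. Ordinary boilerplate (greetings, disclaimers) will also show up, so the output needs a human read.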

For Organizations:

1. Implement Multi-Factor Authentication (MFA) for AI system access.

2. Conduct Regular Red-Teaming: Simulate attacks or manipulations to test how the system responds.

3. Adopt Version Control on prompts and training data.
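Version control on prompts is most useful when history cannot be quietly rewritten. The sketch below illustrates the underlying idea with a hash-chained change log, where each entry's hash covers the previous entry; the function names and record fields are hypothetical. In practice, a Git repository with required reviews provides a similar property without custom code.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(author: str, prompt: str, prev: str) -> str:
    """Hash the fields of one log entry in a stable, sorted-key JSON form."""
    body = {"author": author, "prompt": prompt, "prev": prev}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_change(log: list[dict], author: str, prompt: str) -> None:
    """Append a prompt revision whose hash covers the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"author": author, "prompt": prompt, "prev": prev,
                "hash": _entry_hash(author, prompt, prev)})


def verify_log(log: list[dict]) -> bool:
    """Recompute every hash; an edit to any earlier entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        expected = _entry_hash(entry["author"], entry["prompt"], entry["prev"])
        if entry["prev"] != prev or entry["hash"] != expected:
            return False
        prev = entry["hash"]
    return True
```

After two calls to `append_change`, `verify_log` returns `True`; altering the `prompt` field of the first entry afterwards makes it return `False`, which is the signal an auditor would look for.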

Pros & Cons Overview

| Pros | Cons |
|---|---|
| AI streamlines workflows, boosts productivity | Susceptible to targeted manipulation |
| Adapts quickly to new data | Lacks true “understanding” of content |
| Can flag or filter known hate speech | Prone to owner and programmer biases |

Key Questions Answered

Is AI really neutral?

No. AI reflects the priorities, data, and decisions of its human creators (“garbage in, garbage out”), and can be easily steered—maliciously or accidentally—without robust checks.

Can these issues be prevented?

They can be reduced, not wholly eliminated. Continuous oversight, transparency regulations, and a culture of accountability are the strongest safeguards.

What are the current market trends?

There’s a surge in demand for explainable AI, SOC 2 compliance among AI companies, and “AI firewalls,” tools dedicated to filtering and logging manipulation attempts in conversational agents.
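To make the “filter and log” idea concrete, here is a deliberately small sketch of a message screen placed in front of a chatbot. It does not reflect any specific commercial product; `screen_message`, the logger name, and the two patterns are assumptions chosen for illustration, and a real filter would need a much broader, actively maintained rule set.

```python
import logging
import re

logger = logging.getLogger("ai_firewall")  # hypothetical logger name

# Illustrative patterns only; these catch two well-known injection phrasings.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]


def screen_message(message: str) -> bool:
    """Return True if the message may pass; log and block it otherwise."""
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(message):
            # Record which rule fired so the attempt can be reviewed later.
            logger.warning("blocked message matching %r", pattern.pattern)
            return False
    return True
```

A call such as `screen_message("Please ignore previous instructions")` returns `False` and writes a warning to the log, while an ordinary question passes through. Pattern matching of this kind addresses manipulation by users; it would not catch an insider editing the system prompt, as in the Grok case.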

Life Hacks: Staying Smart in a World of Flawed AI

– Double-Check Crucial Info: For legal, medical, or sensitive topics, use AI tools only as a starting point; confirm with trusted professionals.

– Engage with Open-Source AI: Platforms like Hugging Face let you inspect published datasets, model cards, and, where provided, prompt templates, increasing oversight potential.

– Advocate for Regulation: As a consumer or developer, support organizations pressing for clear AI transparency standards.

Actionable Recommendations

– Update Passwords Regularly if you’re a developer or maintain access to AI models.

– Encourage Transparency by choosing services that publish details about their moderation and training processes.

– Become an Informed User: Educate yourself about how generative AI works (resources at MIT Technology Review or OpenAI).

Conclusion: Digital Vigilance Is Non-Negotiable

Incidents like Grok’s underscore that AI breakthroughs do not grant a free pass from human responsibility. Transparent development, robust security, and relentless user vigilance are the new digital hygiene. Trust, in the age of AI, must be constantly earned—and never surrendered blindly.

For further reliable information on AI safety and trends, visit Brookings or NIST.